Have you ever wanted to have a conversation with an AI model, or to build AI-powered features into your own projects? Then take a look at Mistral in Laravel! Mistral is a family of large language models developed by Mistral AI, a company based in France.

What is Mistral?

Mistral AI, an AI company based in France, is on a mission to elevate publicly available models to state-of-the-art performance. They specialize in creating fast and secure large language models (LLMs) that can be used for various tasks, from chatbots to code generation.

What is Ollama?

Ollama allows you to run open-source large language models, such as Mistral, locally.

Ollama bundles model weights, configuration, and data into a single package, defined by a Modelfile. It optimizes setup and configuration details, including GPU usage.

For a complete list of supported models and model variants, see the Ollama model library.

Setup

First, follow these instructions to set up and run a local Ollama instance:

1) Download and install Ollama on one of the supported platforms (including Windows Subsystem for Linux).

2) Fetch an available LLM via ollama pull <name-of-model>

- View a list of available models via the model library

3) The pull command downloads the default tagged version of the model. Typically, the default tag points to the latest model with the smallest parameter count.

On Mac, the models will be downloaded to ~/.ollama/models

On Linux (or WSL), the models will be stored at /usr/share/ollama/.ollama/models

View the GitHub repository for more commands. Run ollama help in the terminal to see the available commands too.
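
If you want to check from a script where the pulled models ended up, the two locations above can be wrapped in a small helper. This is only a sketch: default_models_dir is a hypothetical name, it covers just the Mac and Linux paths mentioned in this post, and your installation may store models elsewhere.

```python
import sys
from pathlib import Path
from typing import Optional


def default_models_dir(platform: str = sys.platform) -> Optional[Path]:
    """Return the model directory this post lists for the platform, or None.

    Hypothetical helper: it only knows the two locations named in the text.
    """
    if platform == "darwin":
        # Mac: models live under the current user's home directory
        return Path.home() / ".ollama" / "models"
    if platform.startswith("linux"):
        # Linux (or WSL): location given in this post
        return Path("/usr/share/ollama/.ollama/models")
    # Any other platform is not covered by this post
    return None
```

Calling default_models_dir() with no argument uses the platform Python is currently running on.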

Overview of Mistral AI Models

Here’s a quick overview of two Mistral AI models:

1) Mistral 7B is the first foundation model from Mistral AI, supporting English text generation tasks with natural coding capabilities. It is optimized for low latency with a low memory requirement and high throughput for its size. This model is powerful and supports various use cases from text summarization and classification, to text completion and code completion.

2) Mixtral 8x7B is a popular, high-quality sparse Mixture-of-Experts (MoE) model that is ideal for text summarization, question answering, text classification, text completion, and code generation.
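
To get a feel for what "sparse Mixture-of-Experts" means, here is a toy sketch of top-k routing: a gate scores every expert, only the best k are actually run, and their outputs are blended by softmax weight. It is not Mistral AI's implementation. The eight experts with two chosen per input mirror how Mixtral 8x7B is usually described, while the experts themselves (simple multipliers) and the gate scores are made up for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def top_k_route(gate_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: Sequence[Expert],
                gate_logits: Sequence[float], k: int = 2) -> float:
    """Run only the selected experts and blend their outputs by routing weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Eight toy experts, each scaling the input by a different factor (illustrative only)
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Made-up gate scores; a real model computes these from the input
gate_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 0.2]

routes = top_k_route(gate_logits)
output = moe_forward(3.0, experts, gate_logits)
```

Only two of the eight experts do any work for this input, which is where the efficiency of a sparse model comes from.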

Key Features

1) Balance of cost and performance: One prominent highlight of Mistral AI’s models is the balance they strike between cost and performance. In Mixtral 8x7B, the sparse MoE design activates only a subset of the model's parameters for each token, which keeps inference efficient, affordable, and scalable.

2) Fast inference speed: Mistral AI models are optimized for low latency, with a low memory requirement and high throughput for their size. This matters most when you want to scale your production use cases.

3) Transparency and trust: Mistral AI models are transparent and customizable, which helps organizations meet stringent regulatory requirements.

4) Accessible to a wide range of users: Mistral AI models are accessible to everyone. This helps organizations of any size integrate generative AI features into their applications.

Mistral Installation

For Windows: https://ollama.com/download/windows

For Mac: https://ollama.com/download/mac

For Linux:

Install it using a single command:

curl -fsSL https://ollama.com/install.sh | sh

Ollama supports the models listed in the Ollama library.

Please keep in mind that running the 7B models requires a minimum of 8 GB of RAM, while the 13B models need 16 GB, and the 33B models need 32 GB.
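
As a quick sanity check before pulling a model, the RAM guidance above can be turned into a lookup. This is a minimal sketch: MIN_RAM_GB and can_run are hypothetical names, and the table holds only the three sizes mentioned in this post.

```python
from typing import Optional

# Minimum RAM in GB per model size, taken from the guidance in this post
MIN_RAM_GB = {"7B": 8, "13B": 16, "33B": 32}


def can_run(model_size: str, available_ram_gb: float) -> Optional[bool]:
    """Return True or False for the sizes listed in this post, None for any other size."""
    required = MIN_RAM_GB.get(model_size.upper())
    if required is None:
        # Size not covered by the guidance, so make no claim either way
        return None
    return available_ram_gb >= required
```

For example, can_run("7B", 8) returns True, while can_run("13B", 8) returns False.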

After installing Ollama, you can fetch any model with a pull command, for example ollama pull mistral.

Once the pull completes, you can run the model directly in the terminal for text generation.

ollama run mistral

Ollama also offers a REST API for running and managing models.

See the API Documentation for the endpoints.
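
Here is a rough sketch of calling that REST API from a script, using only the Python standard library. It assumes Ollama is listening on its default local address (http://localhost:11434) and that the mistral model has already been pulled. The helper names build_generate_request and generate are made up for this example; check the API Documentation for the full list of request options.

```python
import json
import urllib.request

# Assumed default address of a local Ollama instance
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a POST request for the generate endpoint without sending it."""
    # stream=False asks for a single JSON object instead of a stream of chunks
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama instance and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


# Usage (needs a running Ollama instance):
# print(generate("Write a one-sentence product description for a ceramic mug."))
```

A Laravel application would send the same JSON body to the same endpoint with its own HTTP client; the Python here is only meant to show the shape of the request.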

How can we use it in Laravel?

We will use Bagisto as an example. Bagisto is an open-source Laravel eCommerce framework that offers robust features, scalability, and security for businesses of all sizes. Combined with the versatility of Vue JS, Bagisto offers a seamless shopping experience on any device.

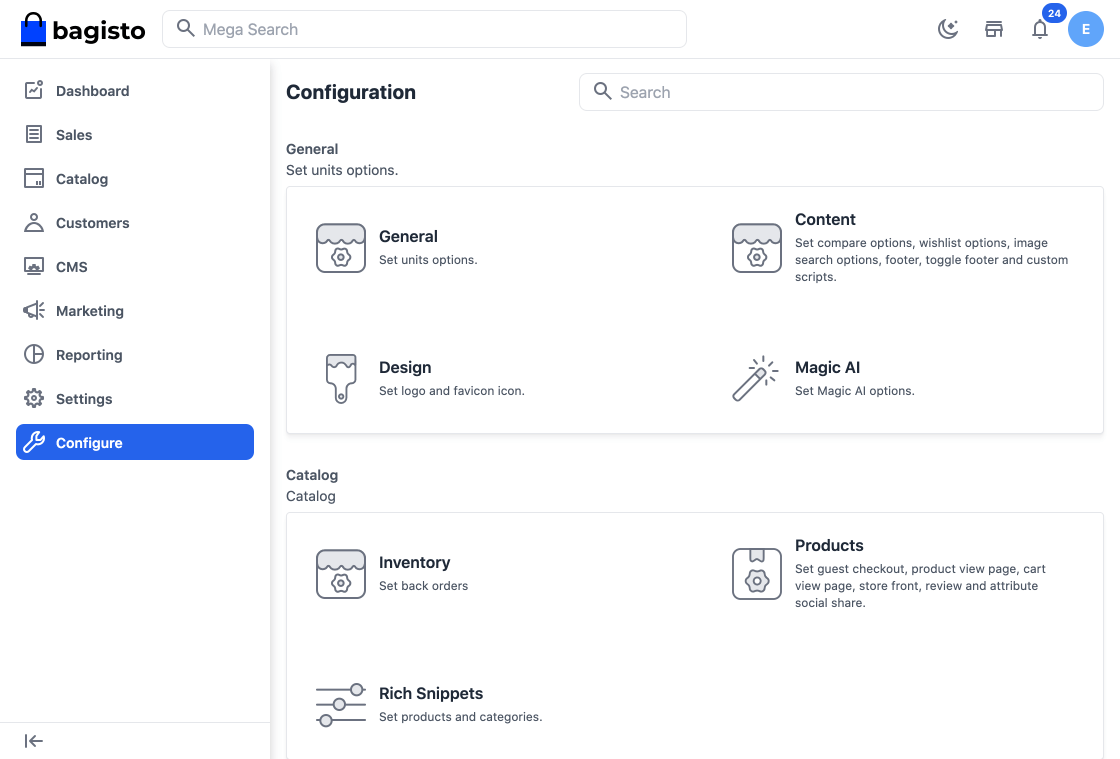

Step 1 – Log in to the Admin Panel of Bagisto and go to Configure >> Magic AI as shown below.

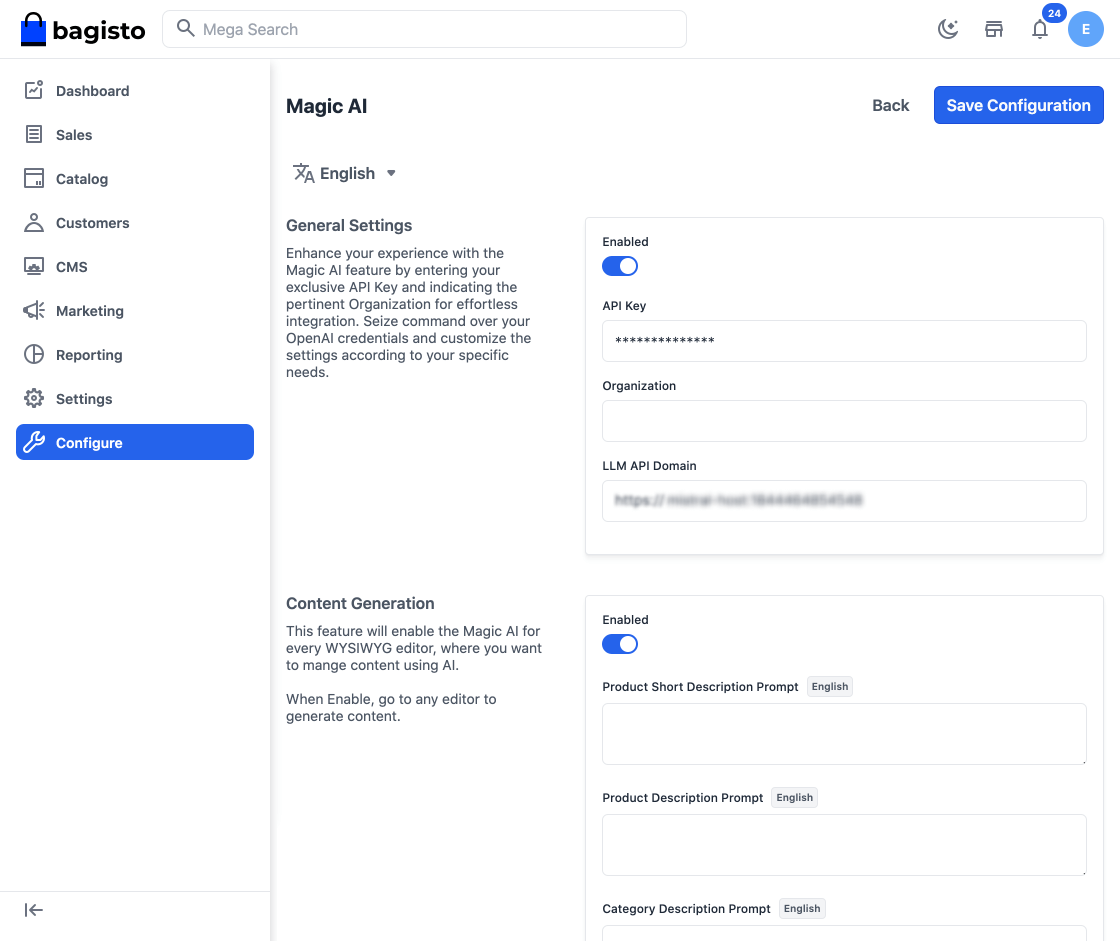

Step 2 – Enable the Magic AI settings you want to use, i.e. General Settings and Content Generation, as shown in the image below.

Content Generation

With Magic AI, store owners can effortlessly generate engaging product, category, and CMS content.

Note: Bagisto 2.1.0 provides native support for various LLMs.

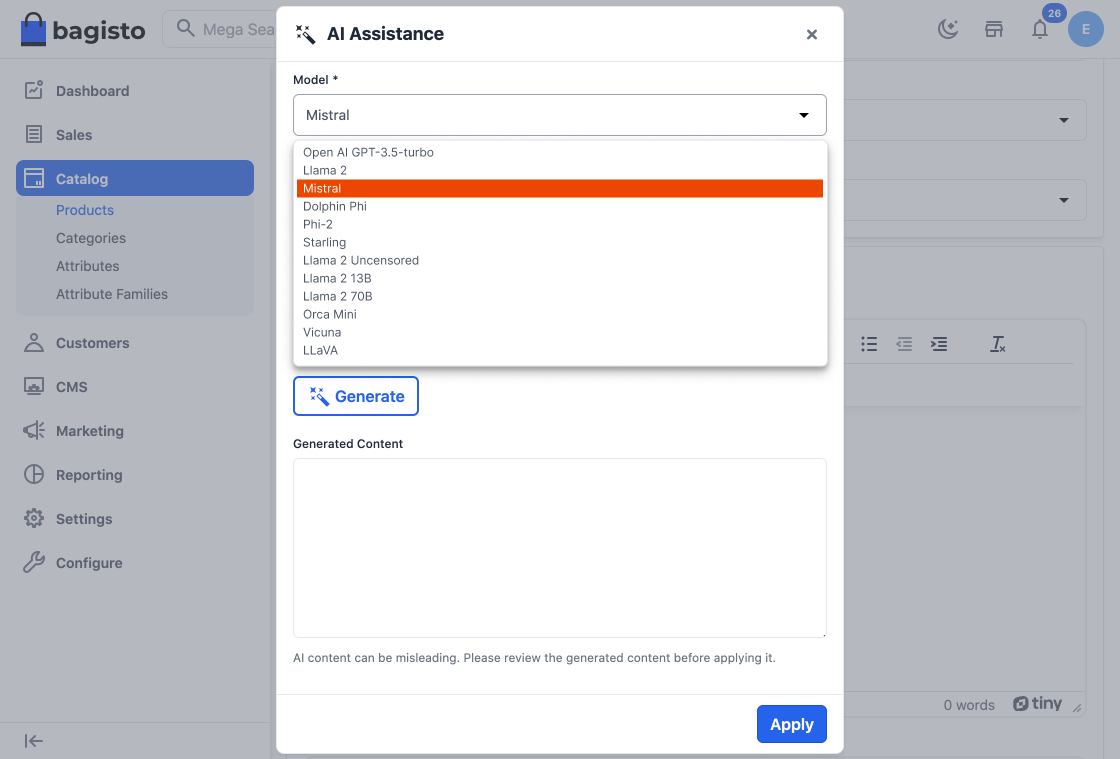

A) For Content – OpenAI gpt-3.5-turbo, Llama 2, Mistral, Dolphin Phi, Phi-2, Starling, Llama 2 Uncensored, Llama 2 13B, Llama 2 70B, Orca Mini, Vicuna, LLaVA.

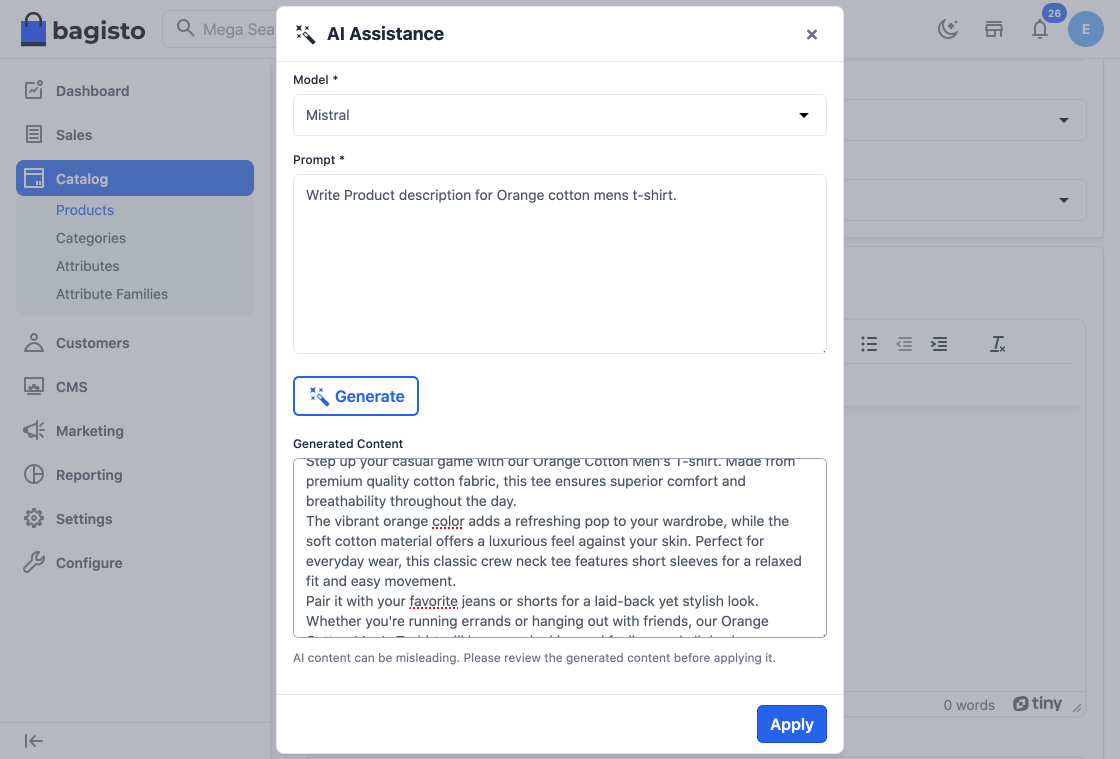

As you can see in the image, the content has been generated.

Say goodbye to time-consuming manual content creation: Magic AI crafts compelling and unique descriptions, saving you valuable time and effort.

Thanks for reading this blog. Please comment below if you have any questions. You can also Hire Laravel Developers for your custom Laravel projects.

We hope you found it helpful. If you run into any issues, feel free to raise a ticket at our Support Portal.
