In this blog, we will talk about AI chatbots: how they work, what they need, and the key parts that bring them to life.

Before starting, let’s look at how an AI chatbot can benefit an e-commerce business.

Bagisto recently added a new product, AI Chatbot. From simple customer service assistants to cutting-edge AI-powered conversational agents, chatbots have become an integral part of our digital landscape, transforming the way we interact with technology and businesses.

Here are a few ways an AI Chatbot can help grow your business and improve customer satisfaction:

- A 24/7 assistant on your e-commerce store: AI Chatbot can serve as a round-the-clock assistant on your e-commerce platform, answering users’ queries within seconds.

- Policy-related queries: AI Chatbot can answer users’ questions about your store policies, such as the return policy, within seconds.

- Product-related queries: Instead of browsing through hundreds of products, users can ask AI Chatbot, which returns the matching products along with their URLs.

- Discount-related queries: Users like to know whether discounts are available, and the chatbot can tell them.

- Customer engagement: Fast responses from AI Chatbot increase user engagement on your e-commerce platform.

- Saves customers’ time: Faster answers to their queries and easier product discovery save users a lot of time.

So let’s start our journey into the world of chatbots.

Understanding the Chatbot

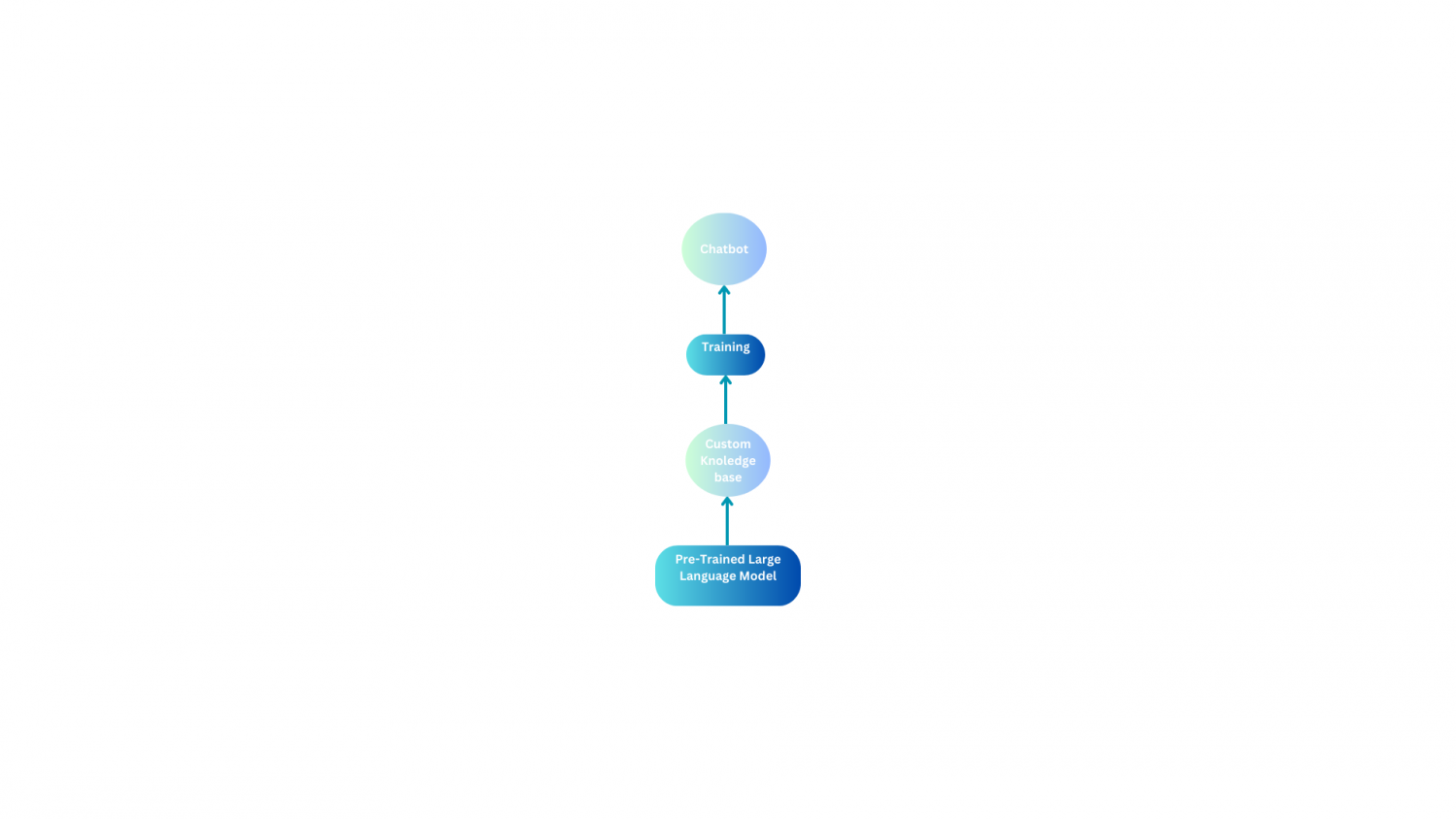

An AI chatbot is essentially a pre-trained Large Language Model (LLM) that is adapted to answer user queries from your own custom data.

Understanding Large Language Models

A large language model (LLM) is a model that can understand and generate human language. It is a neural network trained on millions of text documents for hundreds of hours on a large number of GPUs.

Why use a pre-trained large language model?

As discussed above, training an LLM is very costly, computationally expensive, and time-consuming, and it requires a highly skilled team of data engineers and data scientists.

Once an LLM is trained, however, it can be reused easily, so it is generally better to use a pre-trained LLM than to train one from scratch.

To use a pre-trained LLM such as OpenAI’s GPT models, you need to provide an OpenAI API key. Bagisto provides an option to enter this API key and start using the chatbot.

Structure of a Chatbot

Custom Knowledge Base

The custom knowledge base is the text data from which you want the chatbot to answer queries. Suppose you want to deploy a chatbot on your e-commerce website: you would want it to answer users’ questions about your return policy or the products and services you offer.

In that case, your knowledge base would be the data containing your return policy and the list of products and services you offer.

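```python
from langchain.document_loaders import TextLoader

def load_knowledge_base(file_path):
    # Load the raw text file (e.g. return policy, product list) as LangChain Documents
    loader = TextLoader(file_path)
    documents = loader.load()
    return documents
```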

Training the Chatbot on Custom Data

Now comes the tricky part. The knowledge base can be huge; for example, it might contain 50,000 words, but pre-trained LLMs such as OpenAI’s models have token limits. A token limit is the maximum number of tokens (words or pieces of words) that an LLM can process at once.

The OpenAI model referred to here has a token limit of about 16,000 tokens, so how can the chatbot answer queries from 50,000 words of data? This is where LangChain comes in.
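To put these numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes OpenAI’s rule of thumb that one token is about 0.75 English words; a real tokenizer would give exact counts. `estimate_tokens` and `windows_needed` are hypothetical helpers written only for this illustration.

```python
import math

# Assumption: OpenAI's rule of thumb that 1 token is roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for English text (not an exact tokenizer count)."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def windows_needed(word_count: int, token_limit: int) -> int:
    """Minimum number of full-size prompts needed to send the whole text once."""
    return math.ceil(estimate_tokens(word_count) / token_limit)


knowledge_base_words = 50_000
token_limit = 16_000

tokens = estimate_tokens(knowledge_base_words)
print(tokens)                                              # 66667
print(tokens <= token_limit)                               # False
print(windows_needed(knowledge_base_words, token_limit))   # 5
```

Even under this rough estimate, the whole knowledge base needs several prompts’ worth of tokens, which is why the text is split into chunks and only the relevant ones are sent to the model.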

LangChain

LangChain is a framework for developing applications powered by language models. It is available in Python and TypeScript/JavaScript, and the JavaScript version can run in a variety of environments, including Node.js, Cloudflare Workers, and the browser.

```python
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import Chroma
from langchain.document_loaders import TextLoader
from langchain.docstore.document import Document
from langchain.prompts import PromptTemplate
from langchain.indexes.vectorstore import VectorstoreIndexCreator
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI
```

Solving the token-limit problem with LangChain

LangChain splits the data into smaller chunks using CharacterTextSplitter and stores each chunk as an embedding (a vector). Whenever a user asks a question, it first finds the chunk of text most related to the query, then sends that chunk along with the query to the LLM to generate the answer.

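```python
import os
import pickle

from langchain.text_splitter import CharacterTextSplitter

def split_data_to_chunks(documents):
    # Reuse previously computed chunks if they were cached on disk
    if os.path.exists("chunks.pkl"):
        with open("chunks.pkl", "rb") as file:
            docs = pickle.load(file)
    else:
        # Split documents into chunks of up to ~1000 characters, no overlap
        text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
        docs = text_splitter.split_documents(documents)
        # Cache the chunks so the split is not repeated on the next run
        with open("chunks.pkl", "wb") as file:
            pickle.dump(docs, file)
    return docs
```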
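To see what `chunk_size` and `chunk_overlap` control, here is a minimal plain-Python sketch of fixed-size character chunking. It is a simplified stand-in, not LangChain’s implementation: `CharacterTextSplitter` first splits on a separator (a blank line, `"\n\n"`, by default) and then merges the pieces up to `chunk_size`. `split_into_chunks` is a hypothetical helper.

```python
def split_into_chunks(text: str, chunk_size: int = 1000, chunk_overlap: int = 0) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters, so text near a chunk
    boundary also appears, with some surrounding context, in the next chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4))                   # ['abcd', 'efgh', 'ij']
print(split_into_chunks("abcdefghij", chunk_size=4, chunk_overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

With `chunk_overlap=0`, as in the code above, chunks simply follow one another; a small overlap trades some extra storage for a lower chance that an answer is cut in half at a chunk boundary.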

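```python
import os
from langchain.embeddings.openai import OpenAIEmbeddings

def compute_embeddings(docs):
    # OpenAI embedding model; the API key is read from the OPENAI_API_KEY environment variable
    return OpenAIEmbeddings(openai_api_key=os.environ["OPENAI_API_KEY"])
```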

How does Langchain find the most related text chunk to the user’s queries?

Computers only understand numbers, so each piece of text is represented as a long vector of numbers, called an embedding. To find the chunk of text most related to a query, the system uses a similarity measure: a mathematical formula that scores how similar two vectors are. A common choice is cosine similarity, which measures the angle between two vectors.
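Cosine similarity divides the dot product of two vectors by the product of their lengths, giving a score between -1 and 1, where higher means the vectors point in more similar directions. The sketch below implements that formula in plain Python; the three-dimensional vectors are made-up toy values, whereas real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|); 1 means same direction, 0 means orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" (made-up numbers for illustration only)
query = [1.0, 0.0, 1.0]
return_policy_chunk = [2.0, 0.0, 2.0]   # same direction as the query
shipping_chunk = [0.0, 1.0, 0.0]        # orthogonal to the query

print(cosine_similarity(query, return_policy_chunk))  # approximately 1.0 (floating point)
print(cosine_similarity(query, shipping_chunk))       # 0.0
```

Because only the angle matters, a long chunk and a short chunk about the same topic can still score as highly similar.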

So, all the text chunks are converted into vectors and stored in a vector database. When a query is asked, it is also converted into a vector. The vector store then compares the query vector with the stored chunk vectors using the similarity measure and returns the most similar text chunk.

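```python
from langchain.vectorstores import Chroma

def make_vector_database(docs, embeddings):
    # Embed every chunk and store the resulting vectors in a Chroma collection
    db = Chroma.from_documents(docs, embeddings)
    return db
```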
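The retrieval step described above can be sketched end to end in plain Python. This is an illustration under two stated assumptions: `toy_embed` is a hypothetical word-count embedding standing in for a real model such as OpenAI’s, and the search compares the query with every chunk (a linear scan), whereas vector stores like Chroma use approximate nearest-neighbour indexes to avoid that at scale. The chunks and vocabulary are made-up examples.

```python
import math
import re
from collections import Counter


def toy_embed(text: str, vocabulary: list[str]) -> list[float]:
    """Hypothetical stand-in for an embedding model: word counts over a fixed vocabulary."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0  # treat an all-zero vector as "no match"


def most_similar_chunks(query: str, chunks: list[str], vocabulary: list[str], k: int = 1) -> list[str]:
    """Linear scan: score the query against every chunk vector and keep the top k."""
    query_vec = toy_embed(query, vocabulary)
    scored = [(cosine_similarity(query_vec, toy_embed(c, vocabulary)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


# Made-up knowledge-base chunks and vocabulary
chunks = [
    "Items can be returned within 30 days for a full refund.",
    "Standard shipping takes 3 to 5 business days.",
    "Use code SUMMER10 to get a discount on all shoes.",
]
vocabulary = ["return", "returned", "refund", "shipping", "days", "discount", "shoes"]

print(most_similar_chunks("How do I get a refund if an item is returned?", chunks, vocabulary))
```

Here the refund question shares the words "returned" and "refund" with the first chunk, so that chunk scores highest and would be sent to the LLM along with the question.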

Understanding Vector Store

A vector store handles all the vector database operations: it stores the embeddings and retrieves the text most similar to a query. LangChain supports a variety of vector stores.

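```python
from langchain.vectorstores import Chroma

def store_and_retrieve_embeddings(docs, db, embeddings):
    # `db` is not used here; the retriever is built from the persisted store below
    persist_directory = "db"
    # Embed the chunks and save the Chroma collection to disk
    vectordb = Chroma.from_documents(
        documents=docs, embedding=embeddings, persist_directory=persist_directory
    )
    vectordb.persist()
    vectordb = None
    # Reload the collection from disk (later runs can start from this step)
    vectordb = Chroma(persist_directory=persist_directory, embedding_function=embeddings)
    # "mmr" = Maximal Marginal Relevance: relevant results that are not near-duplicates
    retriever = vectordb.as_retriever(search_type="mmr")
    return retriever
```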
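The retriever above uses `search_type="mmr"`, Maximal Marginal Relevance. Rather than simply returning the top matches, MMR picks results one at a time, rewarding similarity to the query and penalising similarity to results already picked, so near-duplicate chunks are not returned together. Below is a minimal sketch of the greedy MMR rule on precomputed toy vectors; `mmr` is a hypothetical helper, and LangChain exposes a comparable trade-off parameter named `lambda_mult` (1.0 means pure relevance, lower values favour diversity).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query_vec: list[float], doc_vecs: list[list[float]], k: int, lambda_mult: float = 0.5) -> list[int]:
    """Greedy Maximal Marginal Relevance; returns indices of the selected documents.

    score(d) = lambda_mult * sim(query, d) - (1 - lambda_mult) * max(sim(d, s) for s already selected)
    """
    relevance = [cosine_similarity(query_vec, d) for d in doc_vecs]
    selected: list[int] = []
    candidates = list(range(len(doc_vecs)))

    def mmr_score(i: int) -> float:
        # Highest similarity between candidate i and anything already selected (0 if nothing yet)
        redundancy = max((cosine_similarity(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
        return lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy

    while candidates and len(selected) < k:
        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return selected


query = [1.0, 0.0]
docs = [[1.0, 0.2], [1.0, 0.2], [1.0, -0.5]]  # doc 1 is an exact copy of doc 0

print(mmr(query, docs, k=2, lambda_mult=1.0))  # [0, 1]  (pure relevance keeps the duplicate)
print(mmr(query, docs, k=2))                   # [0, 2]  (MMR swaps the duplicate for doc 2)
```

With `lambda_mult=1.0` the redundancy term vanishes and the result is plain top-k ranking, so both copies come back; at 0.5 the duplicate’s redundancy penalty outweighs its slightly higher relevance.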

Finally, run the chatbot

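```python
import os
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI

def chatbot(query, retriever):
    # Keep only the single most relevant chunk for the query
    doc = retriever.get_relevant_documents(query)[0]
    # temperature=0 keeps answers focused and repeatable; key read from OPENAI_API_KEY
    llm = OpenAI(openai_api_key=os.environ["OPENAI_API_KEY"], temperature=0)
    chain = load_qa_chain(llm, chain_type="stuff")
    response = chain.run(input_documents=[doc], question=query)
    print("AI Agent:- ", response)
```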
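With `chain_type="stuff"`, the retrieved documents are "stuffed" into a single prompt together with the question, and the LLM is called once. The sketch below shows that idea in plain Python without calling any model. The template wording, the `Doc` class (which mirrors the `page_content` field of LangChain documents), and the 12,000-word guard (derived from the rough 0.75-words-per-token assumption and a 16,000-token limit) are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    """Minimal stand-in for a retrieved document (hypothetical, not LangChain's class)."""
    page_content: str


# Hypothetical template; the wording of LangChain's built-in prompt differs.
TEMPLATE = (
    "Answer the customer's question using only the store information below.\n\n"
    "Store information:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_stuff_prompt(docs: list[Doc], question: str, max_words: int = 12_000) -> str:
    """'Stuff' every retrieved chunk into one prompt, rejecting prompts that are clearly too long."""
    context = "\n\n".join(doc.page_content for doc in docs)
    prompt = TEMPLATE.format(context=context, question=question)
    # Rough guard (assumption): ~0.75 words per token, so 12,000 words is about 16,000 tokens
    if len(prompt.split()) > max_words:
        raise ValueError("too much context for one prompt; retrieve fewer or smaller chunks")
    return prompt


docs = [Doc("Items can be returned within 30 days for a full refund.")]
print(build_stuff_prompt(docs, "What is your return policy?"))
```

This is also why the retrieval step matters: "stuffing" only works while the selected chunks plus the question fit inside the model’s token limit.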

Here are a few key points for using Bagisto’s AI Chatbot

In Bagisto’s AI Chatbot, you can choose which vector store to use: Chroma or Pinecone.

After choosing a vector store, provide its API key so the chatbot can use it.

With these simple steps, harness the power of AI effortlessly and unlock a seamless conversational experience with Bagisto’s AI Chatbot. Leave the technical intricacies to us; now you can focus on engaging your customers and enhancing your online store like never before!
