Today, we are going to explore some techniques you can use to minimise the cost of custom dataset training in OpenAI ChatGPT.

OpenAI’s ChatGPT is one of the most powerful language-model tools for generating human-like text, but using it comes at a cost. Despite being a breakthrough solution, it does not know about your own data out of the box, so use cases that involve custom data require additional training, which adds to that cost. However, there are methods to minimize the cost of dataset training in OpenAI.

How Can You Minimize the Cost of Custom Dataset Training?

In this blog, we will explore strategies to minimize the cost of dataset training in OpenAI ChatGPT without compromising the quality of the results.

Define Clear Objectives

It’s very important to define clear and precise objectives before you train on a custom dataset. Once the objectives are clear, you can begin the training process with a better chance of getting the results you want.

Here are some tips you can follow to outline your objectives:

- Be clear on the results you are expecting

- Break down your objectives into smaller tasks

- Make sure that the objectives are feasible and time-bound

Curate a Representative Dataset

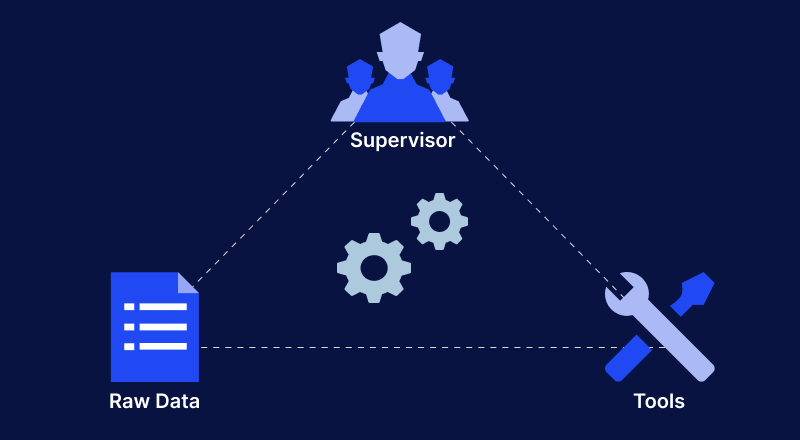

Creating a high-quality custom dataset is central to training the model. The representative dataset you train on should be accurate, free from errors and large enough to cover the task.

The data should also be well labelled, and it should represent the range of inputs the model will encounter.
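To check whether a dataset is representative before paying to train on it, a quick report on label balance, empty rows and duplicates can help. The sketch below is a minimal illustration, assuming each example is a (text, label) pair; the function name `dataset_report` and the `min_share` threshold are hypothetical choices, not part of any OpenAI tool.

```python
from collections import Counter


def dataset_report(examples, min_share=0.1):
    """Summarise a labelled dataset before training.

    `examples` is a list of (text, label) pairs. `min_share` is an
    illustrative threshold: labels making up less than this fraction
    of the data are flagged as under-represented.
    """
    texts = [text.strip() for text, _ in examples]
    label_counts = Counter(label for _, label in examples)
    total = len(examples)
    return {
        "total": total,
        # Rows with no usable text.
        "empty": sum(1 for text in texts if not text),
        # Exact repeats (after trimming whitespace) add cost without adding information.
        "duplicates": total - len(set(texts)),
        "label_counts": dict(label_counts),
        "under_represented": sorted(
            label for label, n in label_counts.items() if n / total < min_share
        ),
    }
```

Under-represented labels are candidates for collecting more examples (or for augmentation), and duplicates can simply be removed before training.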

Data Preprocessing and Cleaning

Before training your model on the custom dataset, make sure that the data is preprocessed and cleaned of errors. It should also be in the right format for the training process to use it.

For example, you may need to clean up text data by removing punctuation or special characters, or you may need to convert numerical data into a format that is compatible with the training algorithm. Additionally, you may need to split the data into training and testing sets, so that you can evaluate the performance of the trained model.
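The following is a minimal sketch of these preprocessing steps, assuming the raw data is a list of (prompt, answer) pairs. It strips unusual symbols (but keeps ordinary punctuation, which carries meaning in conversational text), drops empty rows and exact duplicates, shuffles with a fixed seed, splits off a test set, and writes one JSON object per line in the chat-style `messages` layout that OpenAI’s fine-tuning guide describes. The helper names are hypothetical, and you should check the current OpenAI documentation for the exact format your target model expects.

```python
import json
import random
import re


def clean_text(text):
    """Remove unusual symbols and normalise whitespace, keeping basic punctuation."""
    # Replace anything that is not a word character, whitespace or common punctuation.
    text = re.sub(r"[^\w\s.,!?'-]", " ", text)
    # Collapse runs of spaces, tabs and newlines into a single space.
    return re.sub(r"\s+", " ", text).strip()


def to_chat_record(prompt, answer):
    """Wrap one prompt/answer pair in a chat-style `messages` record."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    }


def prepare(pairs, test_fraction=0.2, seed=42):
    """Clean, de-duplicate, shuffle and split (prompt, answer) pairs."""
    records, seen = [], set()
    for prompt, answer in pairs:
        prompt, answer = clean_text(prompt), clean_text(answer)
        if not prompt or not answer or (prompt, answer) in seen:
            continue  # skip empty rows and exact duplicates
        seen.add((prompt, answer))
        records.append(to_chat_record(prompt, answer))
    random.Random(seed).shuffle(records)  # fixed seed keeps the split reproducible
    n_test = max(1, int(len(records) * test_fraction))
    return records[n_test:], records[:n_test]  # (train, test)


def write_jsonl(records, path):
    """Write one JSON object per line (JSONL)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
```

A typical call would be `train, test = prepare(raw_pairs)` followed by `write_jsonl(train, "train.jsonl")`, where `raw_pairs` and the file name stand in for your own data.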

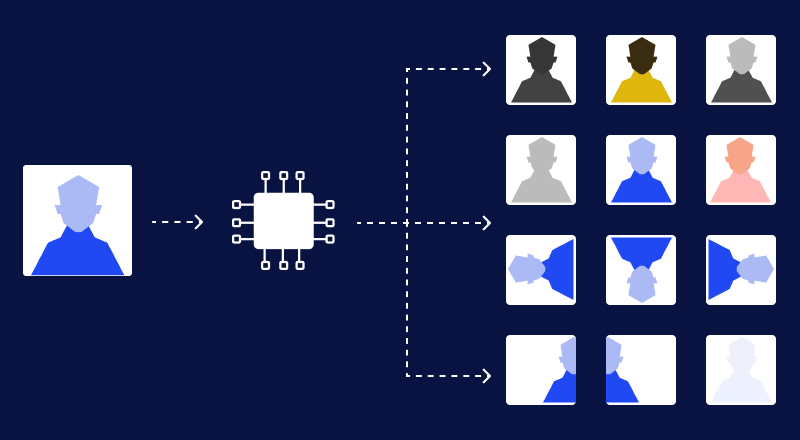

Data Augmentation

You need to make sure that you have enough data to train the model on. Data augmentation achieves this without collecting additional data: it creates new examples from variations of the existing dataset. This exposes the model to more variations of the same information and helps it generalise.

You can do this either by making minor changes to existing examples or by using deep learning models to generate new data points.
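Here is a minimal sketch of the "minor changes" approach for text, assuming a hand-made synonym table (`SYNONYMS` is a hypothetical placeholder). It creates variants by swapping in synonyms and randomly dropping words; the deep-learning approach is not shown. Word matching is exact and case-sensitive, so this only illustrates the idea.

```python
import random

# Hypothetical synonym table for illustration; a real project would use a
# thesaurus or a list tuned to its own domain vocabulary.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "error": ["fault", "failure"],
    "help": ["assist", "support"],
}


def synonym_swap(sentence, rng):
    """Replace every word found in SYNONYMS with a randomly chosen synonym."""
    return " ".join(
        rng.choice(SYNONYMS[word]) if word in SYNONYMS else word
        for word in sentence.split()
    )


def random_drop(sentence, rng, p=0.1):
    """Drop each word with probability p; if every word is dropped, return the input."""
    kept = [word for word in sentence.split() if rng.random() > p]
    return " ".join(kept) if kept else sentence


def augment(sentence, n_variants=3, seed=0):
    """Return up to n_variants distinct, lightly modified copies of sentence."""
    rng = random.Random(seed)  # fixed seed makes the augmented set reproducible
    variants = set()
    for _ in range(n_variants * 5):  # bounded number of attempts
        candidate = random_drop(synonym_swap(sentence, rng), rng)
        if candidate != sentence:
            variants.add(candidate)
        if len(variants) == n_variants:
            break
    return sorted(variants)
```

Each augmented example should keep the original label or answer, so it is worth spot-checking variants to make sure the changes have not altered the meaning.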

Transfer Learning

Transfer learning makes training more efficient. The model is first trained on a large, general dataset and is then further trained (fine-tuned) on a smaller, task-specific dataset. Training on a small custom dataset this way saves a lot of cost and time.

This works because the model has already been trained on a large dataset, so it does not have to start from scratch. It also tends to make the model more accurate, because it has already learned some features related to the task.

For example, if you want to build a model to classify images of cats and dogs, you could start with a pre-trained model that has been trained on a large dataset of images of objects. This model would have already learned some of the features that are important for image classification, such as edges, shapes, and colours.

You could then fine-tune this model on a smaller dataset of images of cats and dogs. This would help the model learn the specific features that are important for classifying cats and dogs.
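The idea of keeping what was learned earlier fixed and training only a small new part can be sketched in plain Python. This is a toy illustration, not a real image model: `frozen_backbone` is a hypothetical stand-in for a pre-trained feature extractor, the 2-D points stand in for cat and dog images, and only a small logistic-regression head is trained on top of the fixed features. In practice you would start from a real pre-trained network and replace its final layer.

```python
import math


def frozen_backbone(x):
    """Hypothetical stand-in for a pre-trained feature extractor.

    In real transfer learning this would be a network trained on a large,
    generic dataset; here it is a fixed function that is never updated.
    """
    return (x[0] + x[1], x[0] - x[1])


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head on top of the frozen features."""
    # The backbone is frozen, so its features can be computed once and reused.
    features = [(frozen_backbone(x), y) for x, y in examples]
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for f, y in features:
            error = sigmoid(w[0] * f[0] + w[1] * f[1] + b) - y
            # Gradient step on the head's parameters only.
            w = [w[i] - lr * error * f[i] for i in range(2)]
            b -= lr * error
    return w, b


def predict(head, x):
    """Classify x as 0 (cat) or 1 (dog) using the frozen features and trained head."""
    w, b = head
    f = frozen_backbone(x)
    return 1 if w[0] * f[0] + w[1] * f[1] + b > 0 else 0


if __name__ == "__main__":
    cats = [(0.1, 0.2), (0.3, 0.1), (0.2, 0.4)]  # toy "cat" inputs, label 0
    dogs = [(2.0, 1.8), (1.7, 2.2), (2.1, 2.3)]  # toy "dog" inputs, label 1
    data = [(x, 0) for x in cats] + [(x, 1) for x in dogs]
    head = fine_tune_head(data)
    print([predict(head, x) for x, _ in data])
```

Only the head’s three numbers (two weights and a bias) are updated, so each training step is cheap; the same reasoning explains why fine-tuning a pre-trained model costs far less than training one from scratch.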

Utilize Smaller Models

Smaller models are much cheaper and faster to train than larger models. When fine-tuned on a focused dataset, they can perform well on that specific task.

Because these models have fewer parameters to train, training takes less time and costs less. Fewer parameters also make small models simpler to inspect and faster at generating predictions.
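To see how quickly parameter counts grow with model size, the sketch below counts the weights and biases of simple fully connected networks. The layer sizes are arbitrary, hypothetical choices; real language models use different architectures, but the number of trainable parameters still largely drives the training cost.

```python
def dense_param_count(layer_sizes):
    """Count the weights and biases in a fully connected network.

    `layer_sizes` lists the width of each layer, input first and output last.
    A layer with n_in inputs and n_out outputs has n_in * n_out weights
    plus n_out biases.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


if __name__ == "__main__":
    small = [512, 128, 2]          # hypothetical small model
    large = [512, 2048, 2048, 2]   # hypothetical larger model
    print("small:", dense_param_count(small))
    print("large:", dense_param_count(large))
```

With these sizes, the larger network has roughly 80 times as many parameters (5,251,074 versus 65,922), and every one of them has to be updated at each training step.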

Choose an appropriate model size that suits your needs

It’s very important to choose the right model size to save resources.

Consider the following points before choosing a model:

- Task complexity: the more complex the task, the larger the model may need to be

- Data volume: the more data there is to analyse, the larger the model may need to be

- Resource availability: check that you have the computing power required to train and run the model

Note that model size is not the only factor: the accuracy of your data matters just as much for proper model training. With the right model in place, you can reduce the cost of custom dataset training considerably.
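One way to act on these points is to shortlist a few candidate models, measure each on a small pilot run, and then pick the cheapest one that meets your accuracy target. The sketch below assumes, as a simplification, that training is billed per token processed (dataset tokens times epochs); the model names, prices and accuracies in the usage section are hypothetical placeholders, so substitute your provider’s current pricing and your own validation results.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str                   # hypothetical model name
    price_per_1k_tokens: float  # training price; use your provider's current figure
    val_accuracy: float         # measured on your own validation set in a pilot run


def training_cost(candidate, dataset_tokens, epochs):
    """Estimated cost, assuming billing per token processed (dataset tokens x epochs)."""
    return dataset_tokens * epochs / 1000 * candidate.price_per_1k_tokens


def cheapest_adequate(candidates, dataset_tokens, epochs, min_accuracy, budget):
    """Return the cheapest candidate that meets the accuracy target within budget, or None."""
    viable = [
        c for c in candidates
        if c.val_accuracy >= min_accuracy
        and training_cost(c, dataset_tokens, epochs) <= budget
    ]
    return min(viable, key=lambda c: training_cost(c, dataset_tokens, epochs), default=None)


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    candidates = [
        Candidate("small-model", 0.002, 0.81),
        Candidate("medium-model", 0.008, 0.90),
        Candidate("large-model", 0.030, 0.92),
    ]
    choice = cheapest_adequate(candidates, dataset_tokens=1_000_000, epochs=3,
                               min_accuracy=0.88, budget=50)
    print(choice)
```

Here the small model misses the accuracy target and the large one exceeds the budget, so the medium model is selected.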

Control Training Parameters

Training parameters (often called hyperparameters) largely determine how accurate your model will be. Commonly tuned ones include the number of epochs (passes over the dataset), the batch size and the learning rate. By controlling these parameters, you can manage both model performance and the amount of compute each training run uses.

For example, if the learning rate is too low, the model learns very slowly and may not reach good performance within your training budget. If it is too high, each update can overshoot good solutions, and training may become unstable or diverge. Training parameters therefore need to be managed carefully for the models to perform well.
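The effect of the learning rate can be seen even on a toy problem. This sketch runs plain gradient descent on the one-parameter loss f(x) = x², whose minimum is at x = 0; it illustrates the principle rather than how any particular model is trained.

```python
def gradient_descent(learning_rate, steps=20, x0=1.0):
    """Minimise f(x) = x**2 (gradient 2x) starting from x0 and return the final x.

    The minimum is at x = 0, so the closer the result is to 0, the better.
    """
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x  # step against the gradient
    return x


if __name__ == "__main__":
    for lr in (0.01, 0.4, 1.1):
        print(f"learning rate {lr}: x after 20 steps = {gradient_descent(lr):.4g}")
```

After 20 steps, a rate of 0.01 is still far from the minimum, 0.4 gets essentially to zero, and 1.1 overshoots by a growing amount on every step and diverges.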

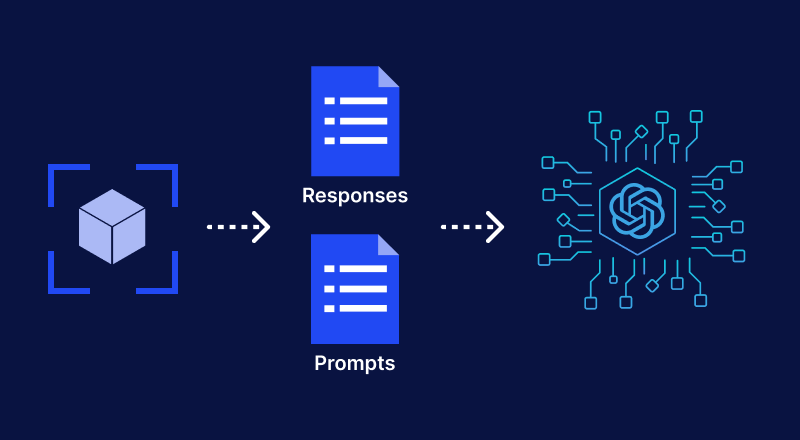

Fine-tuning the model

It’s very important to fine-tune the model with care, and to make sure it is trained on the dataset that matches the task you need it for.

Monitor the model’s performance regularly, for example by tracking its loss on a held-out validation set, so that you can make changes at any stage of training if needed.
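A common way to act on that monitoring is early stopping: halt training once the validation loss stops improving, so you do not pay for epochs that add nothing. The sketch below assumes you record one validation loss per epoch; the function name and the `patience` and `min_delta` parameters are illustrative choices.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve on the
    best value seen so far (by more than min_delta) for `patience`
    consecutive epochs. If that never happens, all epochs run.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)
```

For example, with per-epoch validation losses of 0.9, 0.7, 0.6, 0.62 and 0.65, a patience of 2 stops training after epoch 5, because the loss has not improved for two epochs in a row.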

Cloud Computing and GPUs

You can use cloud computing platforms that offer GPUs for training. GPUs accelerate training considerably compared with ordinary CPUs, which shortens training sessions and can reduce overall cost.
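Whether a GPU actually saves money depends on how much faster it is relative to how much more it costs per hour. The arithmetic below is a minimal sketch with hypothetical hourly prices; the trade-off applies when you run training yourself on rented hardware, since hosted fine-tuning services manage the hardware for you.

```python
def compare_cpu_gpu(cpu_hours, cpu_rate, gpu_rate, gpu_speedup):
    """Compare the cost of one training job on a CPU vs a GPU instance.

    cpu_hours:          how long the job takes on the CPU instance
    cpu_rate, gpu_rate: hourly prices of the two instances
    gpu_speedup:        how many times faster the job runs on the GPU
    """
    cpu_cost = cpu_hours * cpu_rate
    gpu_hours = cpu_hours / gpu_speedup
    gpu_cost = gpu_hours * gpu_rate
    return {
        "cpu_cost": cpu_cost,
        "gpu_hours": gpu_hours,
        "gpu_cost": gpu_cost,
        # The GPU is cheaper whenever its speed-up exceeds the ratio of hourly prices.
        "break_even_speedup": gpu_rate / cpu_rate,
        "cheaper": "gpu" if gpu_cost < cpu_cost else "cpu",
    }


if __name__ == "__main__":
    # Hypothetical prices: CPU instance $0.40/h, GPU instance $3.00/h,
    # and a job that takes 50 CPU-hours and runs 10x faster on the GPU.
    print(compare_cpu_gpu(cpu_hours=50, cpu_rate=0.40, gpu_rate=3.00, gpu_speedup=10))
```

With these placeholder numbers, the GPU job takes 5 hours and costs $15 against $20 on the CPU; the break-even point is a 7.5x speed-up.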

Training on a custom dataset can be a costly and time-consuming process. However, with the right choice of model, data and resources, you can optimise the training process and minimise the cost of custom dataset training in OpenAI ChatGPT.
