The EU AI Act is the world's first comprehensive legal framework for AI. It positions Europe as a leader in responsible AI development.

It aims to ensure that AI systems are safe, ethical, and trustworthy. The Act encourages innovation while safeguarding fundamental rights.

The law sorts AI systems into four risk levels: Unacceptable, High, Limited, and Minimal. It was adopted in 2024, and its obligations are being phased in from 2025.

What Is the EU AI Act?

The EU AI Act is a regulation that governs AI systems in the European Union. It entered into force on August 1, 2024.

It governs how AI is developed, placed on the market, and used across different sectors.

It applies both to companies based in the EU and to companies outside the EU that offer AI systems in the EU.

Objectives of the EU AI Act

The law is designed to ensure that AI systems are safe, transparent, and fair. Its goal is to build public trust in AI.

It requires human oversight of high-risk AI systems and protects fundamental rights and ethical standards.

By encouraging responsible AI use in areas such as healthcare and transportation, it seeks to balance risk management with innovation.

Risk-Based Classification of AI Systems

AI systems are divided into four risk categories according to the possible harm they can cause.

1) Unacceptable Risk (Banned)

These systems are considered a clear threat to fundamental rights and democratic values, so they are banned outright, and violations carry the Act's highest fines.

Prohibited practices include:

- Manipulative or deceptive techniques that distort people's decisions without their awareness.

- Exploiting vulnerable groups, such as children or people with disabilities.

- Social scoring: rating people based on their personal characteristics or behavior.

- Emotion recognition in workplaces and schools.

2) High Risk (Strict Regulations)

These systems require risk assessments, human oversight, clear record-keeping, and information for users. Failure to comply can result in fines or the system being withdrawn from the market.

The following uses face strict rules because they can affect people's safety and fundamental rights:

- Remote biometric identification systems (biometric checks that only confirm a person's claimed identity, such as at an entry gate, are excluded)

- AI used for hiring, screening resumes, or monitoring employees

- AI used by law enforcement, for example to assess the reliability of evidence or the risk of someone offending

- AI in education for grading or for deciding access to learning opportunities

3) Limited Risk (Transparency Required)

People must be told when they are interacting with an AI system, and deepfakes must be clearly labeled.

People exposed to emotion recognition systems must be informed that such a system is in use. This tier is about making sure users know when AI is involved.

4) Minimal or No Risk (Unregulated)

These systems carry a very low risk and are generally not under strict regulations. Examples include spam filters and AI in video games.

They face no new obligations under the Act but must still comply with existing EU law. Developers are encouraged to follow voluntary codes of conduct.

Minimal-risk tools make up most of the AI applications in use today.

Key Provisions of the Act

The Act prohibits some dangerous AI practices and regulates others according to their risk level.

Providers of high-risk AI must meet strict requirements covering risk management, data quality, cybersecurity, and human oversight.

Limited-risk and general-purpose AI are subject to transparency obligations; for example, content generated by AI must be disclosed as such.

The rules apply in stages: the bans on prohibited practices took effect in February 2025 and the obligations for general-purpose AI models in August 2025; most high-risk requirements apply from August 2026, and the rest, for AI built into regulated products, from August 2027.
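For compliance planning, the phased schedule can be kept as data and queried by date. This minimal Python sketch assumes the application dates in Article 113 of the adopted text (later amendments could shift them). `MILESTONES` and `milestones_in_effect` are illustrative names, not part of any official tool, and the labels are simplified summaries rather than legal text.

```python
from datetime import date

# Illustrative only: application dates as set in Article 113 of the adopted Act.
# Labels are simplified summaries, not legal text.
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy duties apply"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk rules apply to AI in products covered by Annex I"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones whose start date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    # On 1 January 2026 only the first two milestones have taken effect.
    for label in milestones_in_effect(date(2026, 1, 1)):
        print(label)
```

Storing the schedule as a list of `(date, label)` pairs keeps it easy to update if the dates change.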

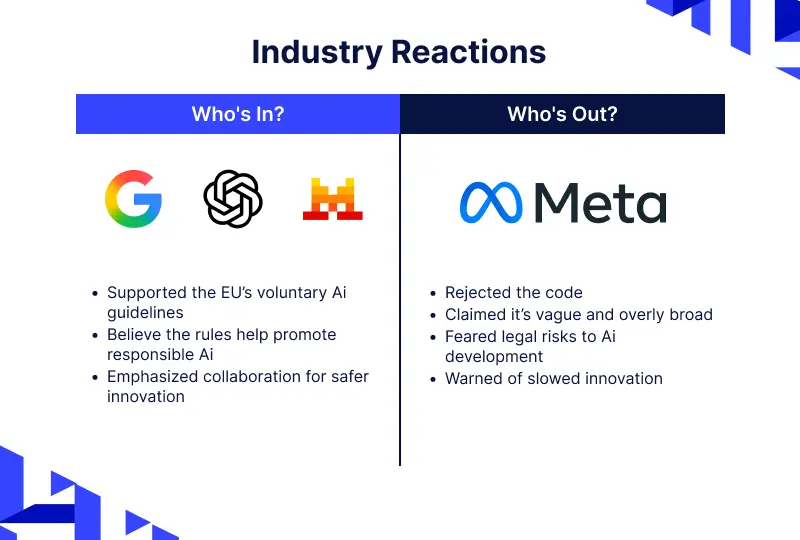

Industry Reactions: Who’s In and Who’s Out?

The EU AI Act has drawn mixed reactions from the global tech industry, revealing deep disagreements over how AI should be regulated.

Companies such as Google, OpenAI, and Mistral signed the EU's voluntary Code of Practice for general-purpose AI, saying it would help ensure AI is used responsibly in Europe.

However, Google warned that the Act could slow innovation because of copyright concerns, delays in approvals, and risks to trade secrets.

Meta, by contrast, refused to sign the code, calling it unclear and overly broad and warning that its legal uncertainties could hold back AI development.

More than 40 major European companies, including Bosch and Siemens, asked the EU to postpone enforcement of the rules to allow more time to review them.

Impact on Businesses and Developers

The Act affects both EU and non-EU companies that offer or use AI tools in the EU.

Startups and small and medium-sized enterprises (SMEs) face extra challenges in meeting the rules.

However, early compliance can give a business a competitive advantage, and companies with GDPR experience can build on it to prepare efficiently.

Global Implications

The EU AI Act is influencing AI rules around the world. Canada's proposed AIDA, for example, reflected similar ideas.

It sets a benchmark for safe and responsible AI worldwide and promotes more consistent international guidelines.

Challenges & Criticism

Some critics argue that the Act could hinder innovation and add complexity for small businesses.

Others think it is too early to regulate a rapidly changing technology.

Further concerns include overregulation, the difficulty of enforcing the rules consistently, and the lack of a coordinated global approach.

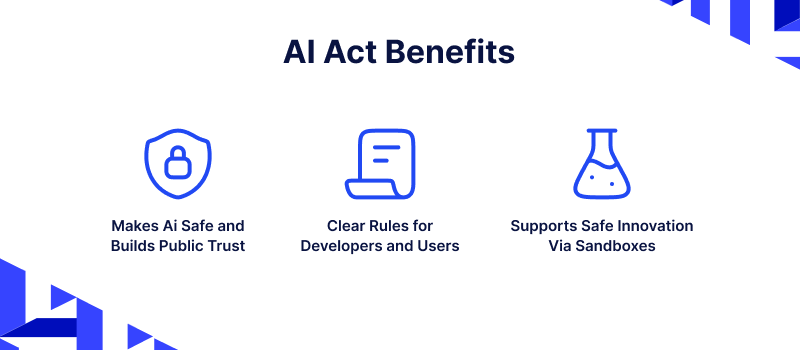

Opportunities & Benefits

The Act makes AI safer and helps people trust it more. It provides clear legal guidelines for both developers and users.

It encourages responsible innovation and allows for testing new ideas in a safe environment through regulatory sandboxes.

How It Benefits Users

The EU AI Act puts people at the center of AI development, making safety, fairness, and transparency priorities in every interaction.

- Protects Fundamental Rights: It bans harmful AI practices such as behavioral manipulation and strictly limits biometric surveillance, safeguarding users' privacy and dignity.

- Improves Transparency: People must be told when they are interacting with AI systems such as chatbots, and deepfakes must be labeled.

- Builds Trust: Clear rules and accountability help users feel more confident and encourage them to use AI technologies responsibly.

- Promotes Fairness: By requiring measures to minimize bias in high-stakes areas such as hiring, education, and public services, the Act aims to ensure fair treatment for everyone.

- Empowers Consumers: Individuals can request explanations of decisions made using high-risk AI and can file complaints about results they believe are unfair or wrong.

Penalties for Non Compliance

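For the most serious violations (prohibited practices), fines can reach €35 million or 7% of a company's total worldwide annual turnover, whichever is higher. Lower caps apply to other breaches, and the exact amount depends on the severity of the violation.

Enforcement is handled by national authorities and, for general-purpose AI models, the EU AI Office. Serious violations can also lead to bans or product recalls.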
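The "whichever is higher" rule is easy to misread, so a short calculation helps. This minimal Python sketch computes the statutory maximum under Article 99. It assumes the three caps in the adopted text: €35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, and €7.5 million or 1% for supplying misleading information to authorities. It also assumes that for SMEs and start-ups the lower of the two amounts applies. The result is only an upper bound, because actual fines are set case by case, and fines on providers of general-purpose AI models follow a separate article. `Violation` and `max_fine` are hypothetical names.

```python
from decimal import Decimal
from enum import Enum


class Violation(Enum):
    """Hypothetical categories mapping to the fine caps in Article 99(3)-(5)."""
    # value = (fixed cap in EUR, share of total worldwide annual turnover)
    PROHIBITED_PRACTICE = (Decimal("35000000"), Decimal("0.07"))
    OTHER_OBLIGATION = (Decimal("15000000"), Decimal("0.03"))
    MISLEADING_INFORMATION = (Decimal("7500000"), Decimal("0.01"))


def max_fine(violation: Violation, worldwide_turnover_eur: Decimal,
             is_sme: bool = False) -> Decimal:
    """Return the statutory upper limit, not the fine a regulator would actually impose."""
    fixed_cap, share = violation.value
    turnover_cap = worldwide_turnover_eur * share
    # Most companies: whichever amount is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)
```

Take a company with €1 billion in worldwide turnover. `max_fine(Violation.PROHIBITED_PRACTICE, Decimal("1000000000"))` returns `Decimal('70000000.00')`, because 7% of turnover (€70 million) exceeds €35 million. `Decimal` is used instead of `float` to keep the money arithmetic exact.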

How to Prepare for the EU AI Act

To follow the rules properly, organizations need to:

- Assess & Categorize: Inventory all AI systems, both in use and in development, classify each by risk level, and identify compliance gaps.

- Organize & Allocate: Set up compliance teams, involve legal, technical, and business departments, and allocate sufficient resources.

- Implement & Align: Establish risk management processes, ensure data is handled responsibly, and build compliance into the development process.

- Train & Adapt: Train teams to communicate clearly about compliance, reuse GDPR processes where possible, and keep up with regulatory changes.
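The Assess & Categorize step amounts to keeping an inventory of AI systems and checking each one against the obligations of its risk tier. This Python sketch assumes each system's tier has already been decided. In practice that is a legal judgment, not something code can infer. The checklist is a simplified paraphrase of the obligations described in this article, not an official one. `RiskTier`, `REQUIRED_CONTROLS`, `AISystem`, and `compliance_gaps` are hypothetical names.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified checklist paraphrasing the obligations in this article.
REQUIRED_CONTROLS: dict[RiskTier, set[str]] = {
    RiskTier.HIGH: {"risk management", "data quality", "record keeping",
                    "human oversight", "cybersecurity", "user information"},
    RiskTier.LIMITED: {"ai disclosure"},
    RiskTier.MINIMAL: set(),  # no new obligations under the Act
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier  # assigned by a legal/compliance review, not inferred here
    controls: set[str] = field(default_factory=set)


def compliance_gaps(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Map each system name to the controls it still lacks (systems with no gaps are omitted)."""
    gaps: dict[str, list[str]] = {}
    for system in inventory:
        if system.tier is RiskTier.UNACCEPTABLE:
            # Banned practices cannot be fixed by adding controls.
            gaps[system.name] = ["prohibited: must not be placed on the EU market or used"]
            continue
        missing = sorted(REQUIRED_CONTROLS[system.tier] - system.controls)
        if missing:
            gaps[system.name] = missing
    return gaps


inventory = [
    AISystem("resume screener", RiskTier.HIGH, {"human oversight", "record keeping"}),
    AISystem("support chatbot", RiskTier.LIMITED),
    AISystem("spam filter", RiskTier.MINIMAL),
]
print(compliance_gaps(inventory))
# {'resume screener': ['cybersecurity', 'data quality', 'risk management', 'user information'], 'support chatbot': ['ai disclosure']}
```

The checklist is kept as data rather than logic, so it can be revised as guidance and standards for each tier are published, without touching the gap-finding code.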

Conclusion

The EU AI Act is the first law of its kind to set rules for the responsible use of AI. It balances encouraging innovation with protecting people's rights.

It provides a blueprint for developing safe and ethical AI. Its success will depend on collaboration and flexibility.
